About MARSS

About the Project

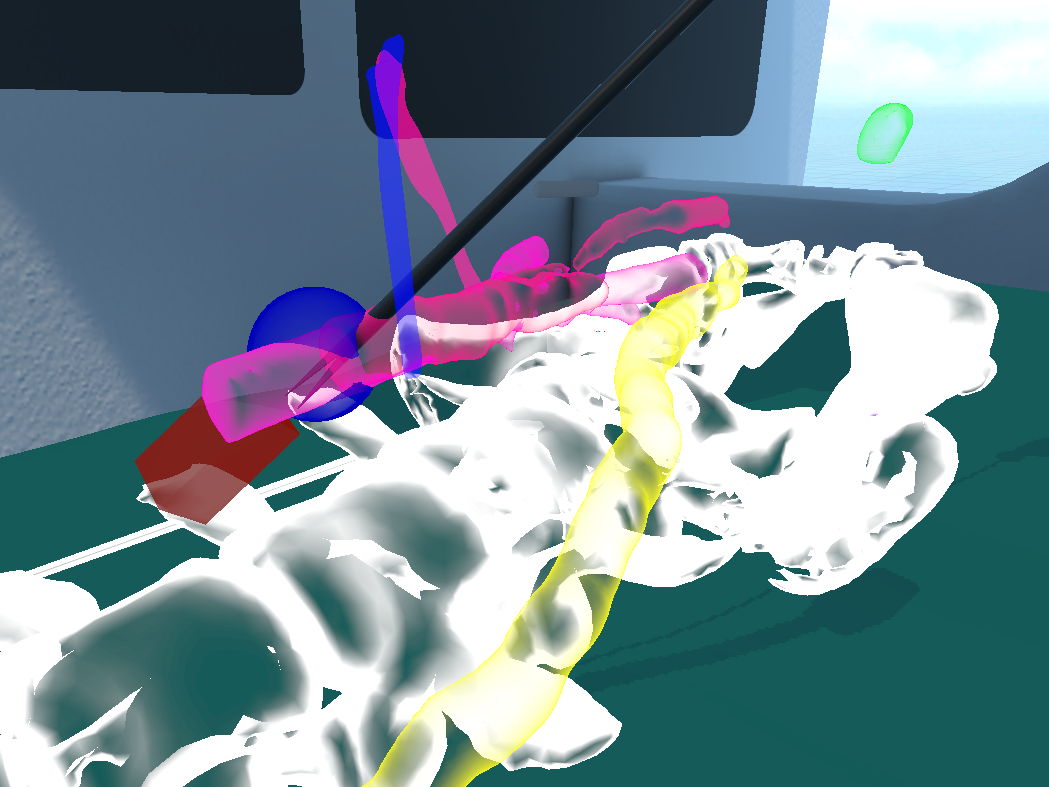

We were tasked with developing a medical mixed reality simulation that integrates haptic and auditory augmentations for laparoscopic procedures. The MARSS staff provided a demo Unity scene containing porcine anatomy, in which the laparoscopic tools' poses were driven by pre-recorded tracking data from an in vivo procedure on a pig abdomen.

Background:

Human perception during minimally invasive surgery is often limited. Despite their many advantages, these procedures remain highly challenging for surgeons. This project aims to improve surgical performance by providing comprehensive multimodal feedback about both the anatomical structures and the surgeon's interaction with them through the surgical tools.

Goal:

- Develop an AR application for multimodal interaction with anatomical structures during laparoscopy.

- Design and implement multimodal feedback, particularly auditory and haptic, to enhance tool-tissue interaction.

My Contributions

I implemented the Finger-Proxy haptic rendering algorithm (Ruspini et al., 1997) in Unity and used the computed forces to drive a custom servo-actuated haptic device (Palmer et al., 2022) over serial communication, enabling real-time tactile interaction with virtual objects.
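The core idea of the proxy approach can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's implementation: it uses a single planar constraint instead of a full anatomy mesh, a linear spring between proxy and device, and a hypothetical linear mapping from force magnitude to a 0 to 180 degree servo angle. The names `Vec3`, `Plane`, `updateProxy`, `proxyForce`, and `forceToServoAngle` are hypothetical. The full algorithm of Ruspini et al. also handles multiple simultaneous constraints and further refinements.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Minimal 3D vector for the sketch.
struct Vec3 {
    double x, y, z;
};

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(double s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }
inline double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline double length(Vec3 v) { return std::sqrt(dot(v, v)); }

// Assumption: a single planar constraint. Points p with
// dot(normal, p - point) >= 0 are free space; `normal` must be unit length.
struct Plane {
    Vec3 point;
    Vec3 normal;
};

// Proxy update for one plane: in free space the proxy coincides with the
// device point; on penetration it stays at the closest point on the surface.
Vec3 updateProxy(Vec3 device, const Plane& plane) {
    double depth = dot(plane.normal, device - plane.point);
    if (depth >= 0.0) {
        return device;  // no contact
    }
    // depth < 0: push the point back along the normal onto the plane.
    return device - depth * plane.normal;
}

// Virtual spring between proxy and device: F = k * (proxy - device).
Vec3 proxyForce(Vec3 proxy, Vec3 device, double stiffness) {
    return stiffness * (proxy - device);
}

// Hypothetical mapping from force magnitude (N) to a servo angle in degrees,
// linear in [0, maxForce] and clamped to the 0..180 range of a hobby servo.
std::uint8_t forceToServoAngle(double forceMagnitude, double maxForce) {
    assert(maxForce > 0.0);
    double t = std::min(std::max(forceMagnitude / maxForce, 0.0), 1.0);
    return static_cast<std::uint8_t>(t * 180.0 + 0.5);  // round to nearest degree
}
```

In each haptic frame, the device-side tool position would be passed to `updateProxy`, the resulting force through `proxyForce`, and its magnitude (via `length`) through `forceToServoAngle`; the single angle byte could then be written to the serial port. The message format actually used by the custom device is not described here, so this framing is an assumption.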

The project's GitHub repository is private pending permission from the other collaborators.

Final Demo Video

Skills

Mixed Reality, Medical Simulations, Unity, C#, Haptic Rendering, Arduino, C++, 3D Printing